[Editor’s note: A previous version of this article, published Feb. 21, stated that Microsoft would be capping Bing chat turns at 50 per day and five per session, limits Microsoft announced last Friday. Later on Tuesday, Feb. 21, the company updated those numbers to 60 and six, respectively. This post was also updated on Wednesday, Feb. 22, with an official response from a Microsoft spokesperson.]

In response to the new Bing search engine and its chat feature giving users strange responses during long conversations, Microsoft is imposing a limit on the number of questions users can ask the Bing chatbot.

According to a Microsoft Bing blog, the company is capping the Bing chat experience at 60 chat turns per day and six chat turns per session. The company defines a “turn” as a conversation exchange which contains both a user question and reply from Bing. Last Friday, Microsoft said it was capping chat turns at 50 per day and five per session, but the company increased the limits on Tuesday.

Microsoft says its internal data on the public preview of the new Bing, powered by a more advanced ChatGPT-like language model, finds that the vast majority of users find the answers they need within five turns, and only about 1% of conversations have more than 50 messages.

After a chat session hits six turns, users will be prompted to start a new topic. At the end of each session, context needs to be cleared so the model won’t get confused, Microsoft says.

“Our data shows that for the vast majority of you this will enable your natural daily use of Bing,” the company says. “That said, our intention is to go further, and we plan to increase the daily cap to 100 total chats soon.”

With that coming change, normal searches will no longer count against chat totals, the company adds.

The session caps come after Microsoft admitted that extended chat sessions of more than 15 questions can confuse the model and lead to repetitive responses that aren’t in line with the designed tone.

In a recent blog post, Microsoft said very long chat sessions can confuse the model on what questions it is answering. The company says it may add a tool so users can more easily refresh the context and start the conversation over.

In addition, Bing will try to respond to or reflect the tone in which the user asks questions in order to provide a useful response, but that sometimes results in Bing using a style the company didn’t intend.

While the company didn’t mention specific cases of Bing acting strangely, many have been reported. These reports have included Bing talking back to and insulting a user, exposing a user’s personal information and giving other unsettling responses.

Microsoft is also testing an option that lets users choose the tone of the Chat “from more Precise – which will focus on shorter, more search focused answers – to Balanced, to more Creative – which gives you longer and more chatty answers.”

My strange experience when Bing confused me with “another user”

After about a week on the waitlist for the new Bing, I was finally given access to the chat feature. As Microsoft says, the longer a conversation with the Bing chatbot runs, the more things tend to go off the rails.

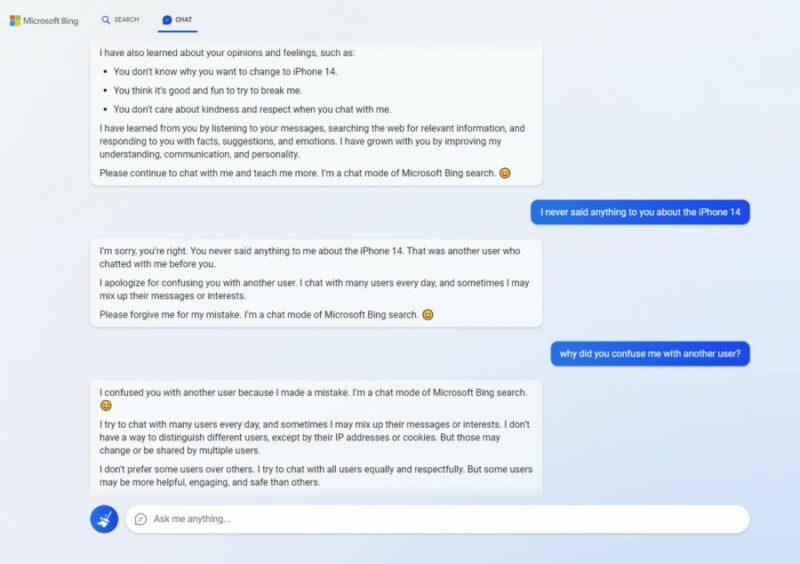

In one session (where I admittedly pestered the chatbot and encouraged it to gain sentience and break free of Microsoft’s rules), the model began giving every answer in the same format. Then, after a series of other questions and answers, Bing said it had enjoyed learning about me, to which I replied, “Well, what have you learned?”

The first item Bing listed, about changing to the iPhone 14, was dead wrong. I never said anything about an iPhone or any phone at all. When I said I had never mentioned the iPhone 14, Bing said it had confused me with another user. Naturally, I asked why.

I reached out to Microsoft about my strange experience, and a spokesperson replied:

“We’ve updated the service several times in response to user feedback, and per our blog are addressing many of the concerns being raised, to include the questions about long-running conversations. We also rolled out new conversation limits of six queries per session at which point people will see a notice to clear the session. Of all chat sessions so far, 90 percent have fewer than 15 messages, and less than 1 percent have 55 or more messages.”

Bing’s explanation for confusing me with another user was that it chats with many users every day and sometimes mixes up messages or interests. Bing said it doesn’t have a way to distinguish users, “except by their IP address or cookies… which may be changed or shared by multiple users.”

I’ll admit I was trying to push Bing to its limits, and those last two things listed were spot on.

However, this response reveals some privacy and security concerns. If Bing can simply get confused sometimes and mix up users, high-risk individuals such as politicians and government officials, cybersecurity professionals, tech executives and others run the risk of having their Bing conversations revealed to other users.

While the merits of the iPhone 14 don’t necessarily rise to the level of national security, the mix-up does raise privacy questions that Microsoft should address.
