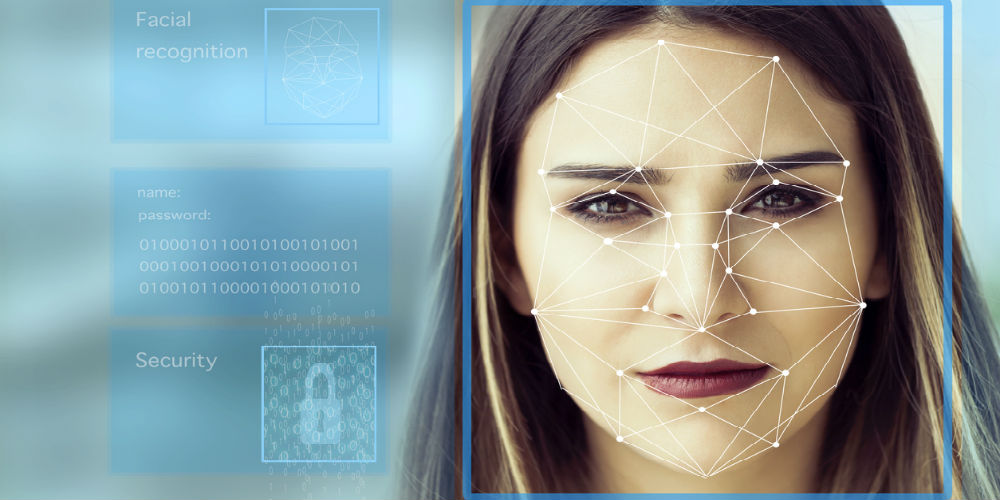

Facial recognition has experienced exponential growth over the past few years thanks to its partnership with artificial intelligence. Its rise has been defined not only by extraordinarily advanced technology but also by controversy and backlash over privacy violations and built-in bias.

The early developers of facial recognition built up the databases of faces that feed the machine learning mechanism by having people come in, sign a consent form, and have their pictures taken. But why rely on such a cumbersome and expensive process when you’ve got the internet, chock full of a diverse array of images and faces?

The internet now provides gargantuan amounts of data for AI-based facial recognition technologies to comprehensively understand human facial features. “For the facial recognition systems to perform as desired, and the outcomes to become increasingly accurate, training data must be diverse and offer a breadth of coverage,” said IBM’s John Smith, in a blog post announcing the release of one of the company’s datasets.

But the process of obtaining data is not always kosher. IBM, for instance, recently released a collection of almost a million photos that it had taken from Flickr, a photo hosting site, and annotated with details to help build its facial recognition algorithm.

“People gave their consent to sharing their photos in a different internet ecosystem,” said Meredith Whittaker, co-director of the AI Now Institute, which studies the social implications of artificial intelligence. “Now they are being unwillingly or unknowingly cast in the training of systems that could potentially be used in oppressive ways against their communities.”

John Smith, though, claims that the company is committed to “protecting the privacy of individuals” and “will work with anyone who requests a URL to be removed from the dataset.”

NBC News, however, found this to be a bit misleading. “IBM requires photographers to email links to photos they want removed, but the company has not publicly shared the list of Flickr users and photos included in the dataset, so there is no easy way of finding out whose photos are included,” they wrote. “IBM did not respond to questions about this process.”

IBM is not the lone culprit in such practices. Dozens of other companies looking to get an edge in the race towards the best facial recognition technology are skirting the lines of privacy and consent.
